At the Flow Center of Excellence in Dordrecht, UReason’s Co-founder Jules Oudmans joined Smart AIS, Samp, and the site’s engineering team to put that vision into action. By combining high-fidelity 3D scanning and smart data integration, the team created an interactive digital environment where asset information is easier to see, understand, and act on.

This collaboration supports safer maintenance, faster decision-making, and continuous learning, all grounded in real-time insight.

Here’s how the transformation unfolded, step by step.

Step 1 – Capturing the Facility in 3D

To kick off the collaboration, UReason, Smart AIS, and the Flow Center of Excellence met on site in Dordrecht to start building a high-fidelity 3D model of the facility’s flow loop and surrounding assets.

To map the site with precision, Smart AIS Scanning Engineer Valeria Simon used the NavVis VLX, a wearable mobile mapping system designed to capture 3D spatial data in complex industrial environments. The result was a detailed point cloud of the entire facility: a reliable digital snapshot of its current state.

Beyond the visuals, the scan serves practical engineering needs: validating engineering drawings, connecting spatial models to real-time operational data, and improving situational awareness for planning and analysis.

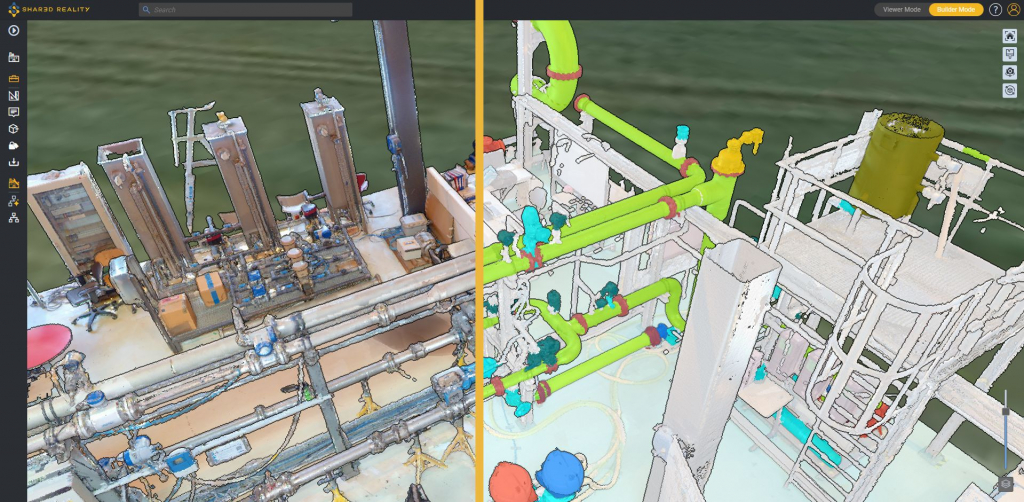

Step 2 – From Scan to Interactive 3D Model

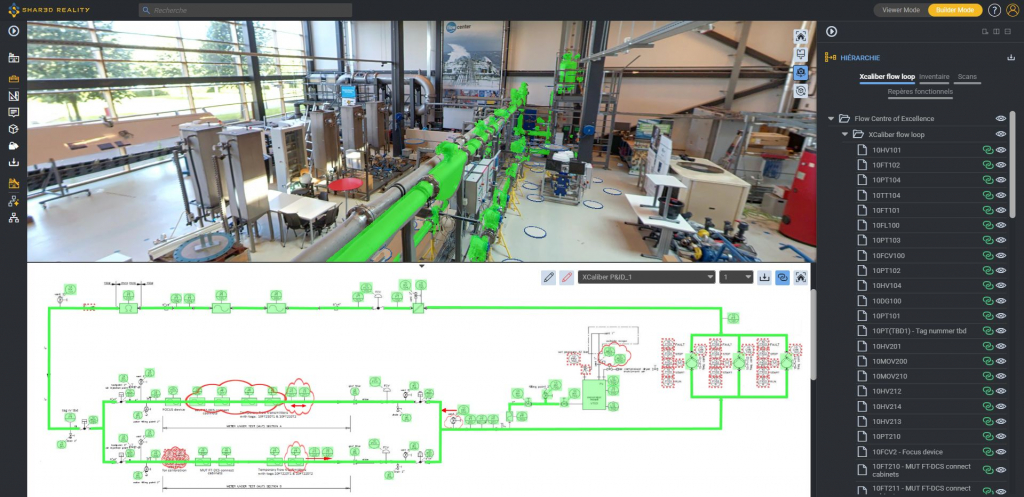

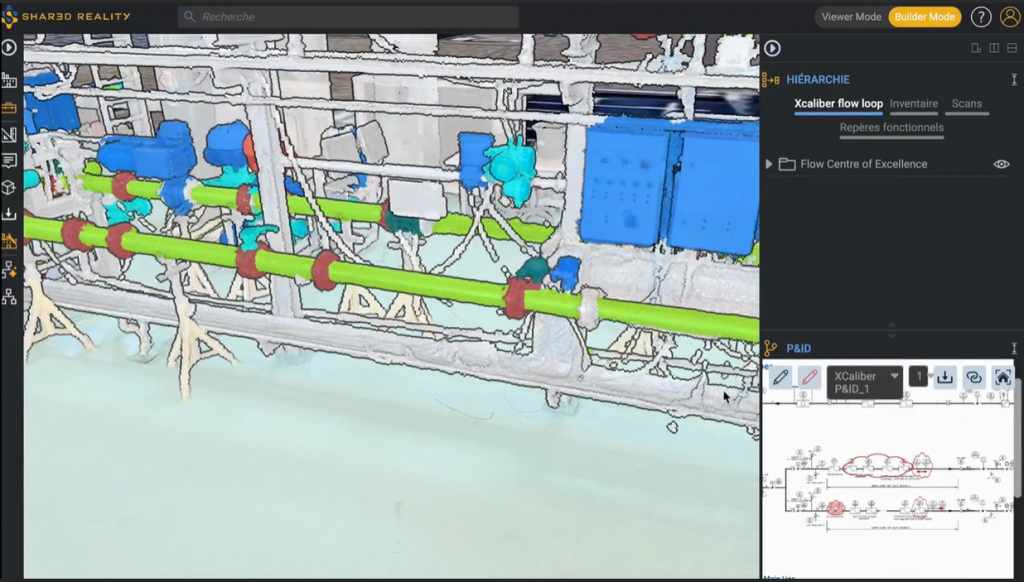

Once the facility scan was complete, Samp’s Shared Reality automatically processed the reality capture data, generating an interactive 3D reality model of the facility’s flow loop and its key components in minutes.

The 3D model gives everyone a consistent visual of the site layout, making it easier to locate equipment, coordinate across teams, and plan changes offsite.

Step 3 – Linking the Model with Technical Data

Using Samp’s Shared Reality, Smart AIS System Engineer Freek Lovink uploaded existing PDF flowsheets and CSV equipment spreadsheets to connect each piece of process equipment and pipe to its corresponding record.

With each component tied to its record, teams can explore the facility visually while accessing up-to-date technical information. This setup simplifies navigation, improves documentation accuracy, and speeds up updates for training, studies, or modifications.
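To make the linking idea concrete, here is a minimal sketch of matching objects in a 3D model to rows in an equipment spreadsheet by tag. It is not how Shared Reality works internally; the column names, tags, and the `link_records` helper are all hypothetical and chosen purely for illustration.

```python
import csv
import io

# Hypothetical equipment export; column names and tags are assumptions.
EQUIPMENT_CSV = """tag,type,description
P-101,pump,Main circulation pump
FT-201,flow meter,Reference flow meter
V-301,valve,Control valve
"""

# Hypothetical tags recognised on objects in the 3D model.
MODEL_TAGS = ["P-101", "FT-201", "V-302"]


def link_records(csv_text, model_tags):
    """Match model objects to equipment records by tag.

    Returns (linked, unmatched): a dict of tag -> record, and a list of
    model tags that have no record in the spreadsheet.
    """
    # Normalise tags so "p-101 " and "P-101" refer to the same asset.
    records = {
        row["tag"].strip().upper(): row
        for row in csv.DictReader(io.StringIO(csv_text))
    }
    linked, unmatched = {}, []
    for tag in model_tags:
        key = tag.strip().upper()
        if key in records:
            linked[key] = records[key]
        else:
            unmatched.append(tag)
    return linked, unmatched


linked, unmatched = link_records(EQUIPMENT_CSV, MODEL_TAGS)
print("linked:", sorted(linked))
print("needs review:", unmatched)
```

Listing the unmatched tags separately is a deliberate choice in this sketch: a tag that appears in the model but not in the spreadsheet is often a documentation gap worth reviewing.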

Step 4 – Turning Insight into Action

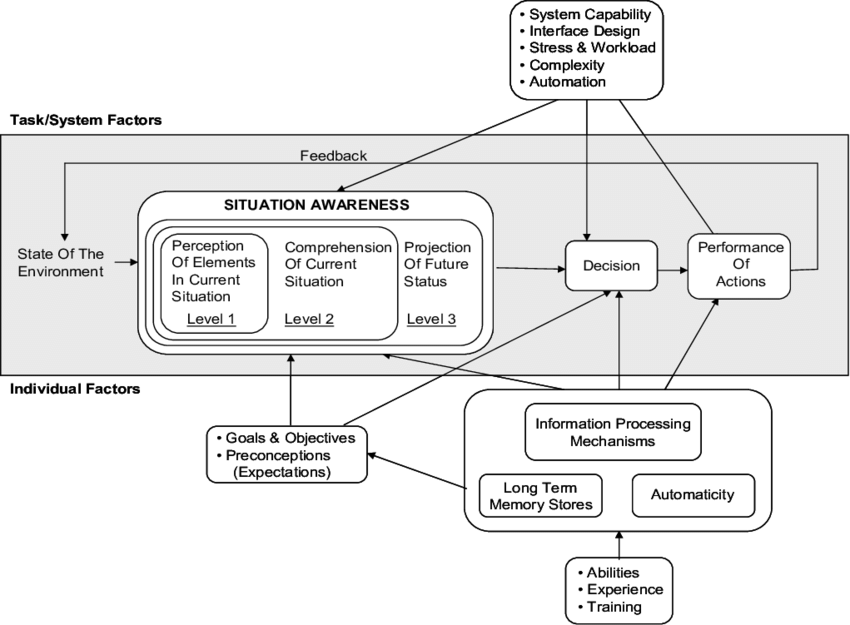

Connecting the 3D reality model to the site’s historian and analytics engine created a unified view that combines 3D visualization, live sensor data, and asset integrity insights. Equipment status is shown directly in 3D, and alerts are placed in context, enabling real-time monitoring, faster decision-making, and more precise issue detection.
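As a rough illustration of how a status can be attached to each asset, the sketch below classifies the latest reading per tag against warning and alarm limits. The tags, readings, limits, and function names are invented for this example; a real setup would pull readings from the historian and limits from the analytics engine.

```python
from dataclasses import dataclass


@dataclass
class Limits:
    """Hypothetical warning and alarm thresholds for one measurement."""
    warn: float
    alarm: float


def classify(value, limits):
    """Return 'alarm', 'warning', or 'normal' for a single reading."""
    if value >= limits.alarm:
        return "alarm"
    if value >= limits.warn:
        return "warning"
    return "normal"


def status_by_asset(readings, limits_by_tag):
    """Map each asset tag to a status; assets without limits are 'unknown'."""
    statuses = {}
    for tag, value in readings.items():
        limits = limits_by_tag.get(tag)
        statuses[tag] = classify(value, limits) if limits else "unknown"
    return statuses


# Assumed latest readings per asset tag (for example, vibration in mm/s).
readings = {"P-101": 7.8, "FT-201": 2.1, "V-301": 4.0}
limits_by_tag = {"P-101": Limits(4.5, 7.1), "FT-201": Limits(3.0, 5.0)}

statuses = status_by_asset(readings, limits_by_tag)
print(statuses)
```

A viewer could then colour each object in the 3D scene by its status, which is what puts an alert in spatial context.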

This reflects the core stages of Endsley’s Situation Awareness Model: perception of relevant data, comprehension of its meaning, and projection of what may happen next.
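The projection stage can be sketched with a simple trend extrapolation: fit a straight line to recent readings and estimate how long until a limit is reached. This is a deliberately simplified assumption, not the method used in the project; the `time_to_limit` helper and the sample values are hypothetical.

```python
def time_to_limit(times, values, limit):
    """Estimate the time remaining until a rising trend reaches `limit`.

    Fits a least-squares line through (times, values) and extrapolates.
    Returns None if the trend is flat or falling, and 0.0 if the fitted
    line has already reached the limit at the latest timestamp.
    """
    n = len(times)
    if n < 2:
        return None
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    if sxx == 0:
        return None  # all readings share one timestamp; no trend to fit
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = mean_v - slope * mean_t
    crossing = (limit - intercept) / slope
    return max(0.0, crossing - times[-1])


# Perception: assumed hourly readings. Comprehension: they are rising.
hours = [0, 1, 2, 3]
vibration = [4.0, 4.5, 5.0, 5.5]

# Projection: how many hours until the assumed alarm limit of 7.0?
remaining = time_to_limit(hours, vibration, limit=7.0)
print(remaining)
```

Real condition monitoring would typically use more robust models, but even a linear estimate shows how projection turns a current reading into a lead time for planning.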

Instead of relying solely on field visits or isolated dashboards, teams can remotely assess what’s happening and understand why, within the full spatial and technical context.

It’s a powerful shift from observing issues to truly understanding them and taking action before they escalate. Shared Reality brings together the right information, at the right time, in the right place, accelerating how teams work, learn, and respond.

This setup not only supports immediate decision-making, but also lays the groundwork for predictive strategies as teams scale their data-driven practices.

Bringing It All Together

What started with a facility scan evolved into something more useful: a shared, data-rich environment where spatial models, technical documents, and live insights work together.

UReason supported the project with a diagnostic perspective, helping frame how real-time condition monitoring and contextual information can combine to support smarter maintenance outcomes. The collaboration also reflects UReason’s close relationship with the Flow Center of Excellence and its commitment to advancing practical, connected solutions in the field.

For industrial teams exploring what’s possible, this project shows how existing data and tools can come together in one environment, ready for action.