What better way to illustrate the future of GenAI, specifically LLMs, than by asking an LLM 😉?

Question: “What is the future of LLMs in Asset Performance Management applications?”

Output: “The future of Large Language Models (LLMs) in Asset Performance Management (APM) applications is promising and likely to bring significant advancements. Here are some potential developments:

1. Enhanced Predictive Maintenance: LLMs can be utilized to analyze vast amounts of data from various sources, including equipment sensors, maintenance logs, and historical performance data. By processing this information, LLMs can predict equipment failures more accurately, allowing for proactive maintenance and reducing downtime.

2. Optimized Resource Allocation: LLMs can help optimize resource allocation by analyzing historical performance data and current operational conditions. By identifying patterns and trends, LLMs can recommend optimal maintenance schedules, spare parts inventory levels, and technician assignments, leading to cost savings and improved efficiency.

3. Real-time Monitoring and Alerts: LLMs can provide real-time monitoring of asset performance by continuously analyzing incoming data streams. They can quickly detect anomalies or deviations from expected performance and generate alerts for maintenance teams to take corrective action promptly, thereby minimizing the risk of equipment failure and maximizing uptime.

4. Integration with IoT and Edge Computing: LLMs can be integrated with Internet of Things (IoT) devices and edge computing technology to enable real-time analysis of sensor data at the source. By processing data locally, LLMs can reduce latency and improve the speed and accuracy of predictive maintenance algorithms, making APM systems more responsive and efficient.

5. Customized Insights and Recommendations: LLMs can provide customized insights and recommendations based on the specific requirements and operating conditions of different industries and organizations. By understanding the unique characteristics of each asset and environment, LLMs can tailor their recommendations to optimize performance and maximize value for the business.

Overall, the future of LLMs in APM applications holds great promise for improving asset reliability, reducing maintenance costs, and maximizing operational efficiency across various industries. However, it’s essential to address challenges such as data quality, model interpretability, and ethical considerations to fully unlock the potential of LLMs in APM.”

The response by ChatGPT is not bad at all, though perhaps a bit overconfident. A known weakness of LLMs is dealing with math, although during our research we have seen an improvement with larger models. Remember that LLMs can digest enormous amounts of text and can analyse and extract its structure, topics and logic. Math texts are more complex: the structure of a formula follows fewer formal rules and has far more variations than written language.

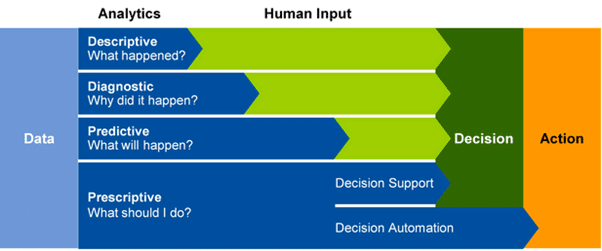

When LLMs are employed in conjunction with information you already have, such as general practices, procedures, asset manuals and maintenance manuals, you save time searching for the appropriate information at the appropriate moment for the issue(s) you are dealing with. It is even fair to say that an LLM can fill the Prescriptive Maintenance capability we require given aging workforces, reduced skillsets, and increased asset complexity:

Gartner “Gartner Says Advanced Analytics Is a Top Business Priority”.
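To make the idea of pairing an LLM with documents you already have a bit more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not a description of how any particular product works: the document snippets, the names `DOCUMENTS`, `retrieve` and `build_prompt`, and the crude word-overlap scoring are all hypothetical. A real setup would more likely use embeddings and a vector store, and would then send the resulting prompt to the LLM of your choice.

```python
import re
from collections import Counter

# Hypothetical snippets standing in for your own manuals and procedures.
DOCUMENTS = {
    "pump_manual": "Centrifugal pump vibration is often caused by bearing wear "
                   "or shaft misalignment. Check bearing temperature first.",
    "valve_procedure": "Control valve stiction shows up as oscillation in the "
                       "flow loop. Inspect the actuator and packing.",
    "lubrication_guide": "Replace bearing grease every 2000 operating hours "
                         "and record it in the maintenance log.",
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query, text):
    """Count how many query words also occur in the text (crude relevance)."""
    query_counts = Counter(tokenize(query))
    text_counts = Counter(tokenize(text))
    return sum(min(query_counts[w], text_counts[w]) for w in query_counts)


def retrieve(query, documents, top_k=2):
    """Return the names of the top_k documents that best match the query."""
    ranked = sorted(documents,
                    key=lambda name: score(query, documents[name]),
                    reverse=True)
    return ranked[:top_k]


def build_prompt(query, documents, top_k=2):
    """Combine the retrieved snippets and the question into one LLM prompt."""
    names = retrieve(query, documents, top_k)
    context = "\n".join(f"[{name}] {documents[name]}" for name in names)
    return ("Answer using only the context below.\n\n"
            f"Context:\n{context}\n\nQuestion: {query}")


if __name__ == "__main__":
    print(build_prompt("pump vibration and bearing temperature", DOCUMENTS))
```

The point of the sketch is the division of labour: the search step narrows your own documentation down to the few passages that matter for the issue at hand, and the LLM only has to reason over those passages.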

LLMs will eventually lead to further automation and autonomy, but our belief is that this will come only after broader industry acceptance. LLMs will catalyse the industry's developments in the area of Autonomous Operations. Before adopting this technology, though, you should set some clear targets, as the number of use cases is immense. Will you apply LLMs for data insights, user training, knowledge sharing, or technical field support? Each case has a different set of requirements, and you may also want to choose different LLMs for different cases.

The potential of LLMs in the manufacturing industry is immense, though it comes at the price of investing time and, today, some computational power. The list of manufacturing companies that are applying LLMs is not long. Worse, it is hard to find examples in manufacturing that go beyond “this-is-what-you-could-use-it-for”. We hope that by sharing some of our research you have gained a better understanding of LLMs and UReason.

This is the last blog in this series. If you want access to the full article, fill in the form down below!
